Our consultant Jenne Justin has been working at in-factory GmbH for 7 years and has been involved in 4 projects in the financial and automotive sectors.

One of our long-standing customers is a well-known car manufacturer in southern Germany, with whom we have been successfully carrying out projects for more than 15 years. Our consultant Jenne Justin was an important part of the test team. In this interview, she shares her experiences with us on the topics of testing according to ISTQB and improved quality assurance through automation.

A successful customer journey with in-factory

Hello Jenne, thank you for sharing your experiences with us. We helped our customer to create a successful customer journey. Can you tell us more about how you were involved?

Jenne: The project I was involved in dealt with customer data (CRM). For example, if a person wants to take a test drive, they register for an appointment via the manufacturer's homepage with their contact details and information about the desired test vehicle. This data is loaded via source systems into an Oracle database using the Data Vault approach. This database is also managed by in-factory GmbH. The loaded data is identified, aggregated and consolidated, and then forwarded to Salesforce. in-factory GmbH ensures that this personal data (including the complete history) can be retrieved in real time.

In customer projects, our colleagues from in-factory GmbH implement new functionalities, increase performance or implement optimizations. Thorough testing is essential to ensure that everything works smoothly and that the requirements are implemented. In order to meet our high quality standards, I was thoroughly trained before the start of the project and completed several days of training and ISTQB certification. Here I learned everything about the basics of testing, from documentation to responsibilities and systems.

So you were able to go straight from theory to practical implementation. How was the testing team structured in your project?

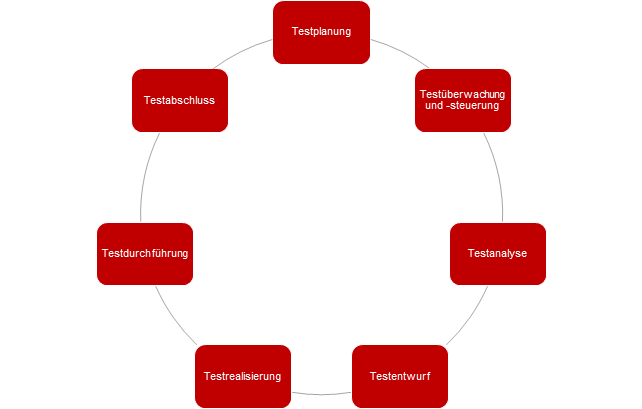

Jenne: There are several roles in the test team. The test managers are responsible for coordination, tracking the upcoming project plan and allocating capacity. The testers carry out the actual tests. The work is carried out in an agile manner according to the Scrum methodology: a first sprint for development, a second sprint for testing the developed increments and a third sprint for the live launch. We are guided by the ISTQB test process.

Optimized test process through ISTQB conformity: From theory to practical implementation

The ISTQB test process - what does it mean?

Test process according to ISTQB

Jenne: The test process begins with the creation of a test plan. This is created in parallel with development in the first sprint and also includes a test concept. The test plan is based on the stories created by the business department. An example of a story would be: a new API connection is needed to read order information from the database. The test concept is developed based on this story. In addition, the objectives and the entry and exit criteria that the test must fulfill are defined to ensure that the requirements of the story are met. In this example, this means that the API works and that the transferred data is correct and correctly formatted (data types). These steps are roughly defined during test planning. In the next step, the test managers take over test monitoring and control. This coordination is important because many requirements have dependencies on other teams or stories.
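The check described in this example (the transferred data is complete and has the right data types) could be sketched roughly as follows. This is only an illustration: the field names, the `EXPECTED_TYPES` schema and the `check_record` helper are assumptions and do not come from the customer project.

```python
from datetime import date

# Hypothetical schema for one order record returned by the API.
EXPECTED_TYPES = {
    "order_id": int,
    "customer_id": int,
    "vehicle_model": str,
    "order_date": date,
}


def check_record(record: dict) -> list:
    """Return a list of findings; an empty list means the record passes."""
    findings = []
    for field, expected_type in EXPECTED_TYPES.items():
        if field not in record:
            findings.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            findings.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return findings


# Illustrative records only; real test data would come from the test database.
good = {"order_id": 1, "customer_id": 7, "vehicle_model": "Model A", "order_date": date(2024, 1, 15)}
bad = {"order_id": "1", "customer_id": 7, "vehicle_model": "Model A"}

print(check_record(good))
print(check_record(bad))
```

A check like this covers only the "correctly formatted" part of the exit criteria; whether the values themselves are correct still has to be compared against known test data.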

Test analysis and test design take place in the second sprint. During test analysis, the testers examine the test basis in more detail. This results in the test design, which determines which test cases have priority, i.e. the logical sequence is defined and important prerequisites are checked, such as: Does the API work? Are the environments ready? Is the correct test data available? The design of the abstract test cases is also documented. In the subsequent test implementation and test execution, the test cases are first described in detail in the documentation tool (e.g. Jira): What is the requirement of the specific test case? What is the expected result? Test execution then comprises the actual tests, in which each individual test case is checked. If errors (bugs) occur, they are returned to development via defect management. Test completion is coordinated by the test managers. A work product is created here that describes what was delivered, whether there were any open errors or deviations and whether the requirement was fulfilled. This is also documented for future projects.
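To make the test design step more tangible, here is a minimal sketch of how documented test cases, their priority and the prerequisites could be represented. The `TestCaseRecord` class, the ticket keys and the prerequisite names are hypothetical; in the project this information lives in the documentation tool.

```python
from dataclasses import dataclass


@dataclass
class TestCaseRecord:
    key: str              # hypothetical ticket key in the documentation tool
    requirement: str      # what the specific test case has to verify
    expected_result: str  # what the tester expects to observe
    priority: int         # 1 = execute first


def execution_order(cases):
    """Order test cases by priority; ties keep their original order."""
    return [c.key for c in sorted(cases, key=lambda c: c.priority)]


def unmet_prerequisites(prerequisites):
    """Return the names of prerequisites that are not yet fulfilled."""
    return [name for name, met in prerequisites.items() if not met]


cases = [
    TestCaseRecord("TC-2", "Order date has the right data type", "Value is a date", 2),
    TestCaseRecord("TC-1", "API responds for a known order", "One record is returned", 1),
]
prerequisites = {"api_reachable": True, "environment_ready": True, "test_data_loaded": False}

print(execution_order(cases))
print(unmet_prerequisites(prerequisites))
```

In this sketch, test execution would only start once `unmet_prerequisites` returns an empty list.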

To stay with the basics for a moment: How do the different environments affect testing?

Overview of test environments

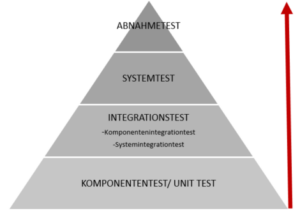

Jenne: Testing at the customer is carried out at several test levels: component, integration, system and acceptance tests. These test levels take place in different environments, from the development environment to the production environment. In component testing (unit testing), our developers test in the development environment. The integration test takes place in the test environment. Only the functionality of the story is tested there. The system test is carried out on integration environment 1, where the integrated overall system is tested. Not only the functionality but also the efficiency and usability are tested, using the so-called white-box test procedure. The acceptance test takes place on integration environment 2, which is a production-like environment. Here, the complete system including interfaces is tested using the so-called black-box test procedure.
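The assignment of test levels to environments described here can be summarized as a small lookup table. The structure and the key names below are an assumption made for illustration; only the mapping itself is taken from the interview.

```python
# Test level -> environment and scope, as described in the interview.
TEST_LEVELS = {
    "component": {
        "environment": "development environment",
        "scope": "unit tests by the developers",
    },
    "integration": {
        "environment": "test environment",
        "scope": "functionality of the story",
    },
    "system": {
        "environment": "integration environment 1",
        "scope": "integrated overall system: functionality, efficiency, usability",
    },
    "acceptance": {
        "environment": "integration environment 2",
        "scope": "complete system including interfaces",
    },
}


def environment_for(level):
    """Look up the environment in which a given test level is executed."""
    return TEST_LEVELS[level]["environment"]


for level, info in TEST_LEVELS.items():
    print(f"{level}: {info['environment']} ({info['scope']})")
```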

The entire process is defined according to ISTQB and is used in a similar form in many projects.

Optimization through automation: increasing efficiency in the test process

In addition to the structure of the test process, increasing efficiency is an important factor. Can you share your experiences with us?

Jenne: We have optimized our testing process. In our customer project, test automation is important and makes sense because the system is in continuous operation and is constantly being optimized and developed further. Automation also ensures that tests are carried out faster and more reliably, which leads to a higher-quality end product.

Automation can be used to quickly and efficiently ensure that no problems occur with other, dependent functionalities as a result of optimizations or further developments. A Python framework was implemented at our customer to carry out the automation. Depending on the story, the practical effort required for automation is between 1 and 4 days. After that, the test stages and the retesting of bugs no longer have to be carried out manually, but can be controlled automatically by a framework. The requirements, such as the API calls, are embedded in the framework, test cases are defined and the expected result is recorded. When the framework is executed, the API calls are automatically executed on specific test data in the test database. All defined test cases in the framework are run through and it is checked whether the output data is correct. If there are discrepancies with the expected result, a report is automatically generated. In our project, every story is automated, which means that the testers no longer have to test manually.
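The principle of such a framework (defined test cases, an API call on test data, comparison with the expected result, a report on discrepancies) can be illustrated with a short sketch. This is not the framework used at the customer: `ApiTestCase`, `run_suite`, `get_order_status` and the test data are hypothetical stand-ins, and the API call is simulated by a dictionary lookup.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ApiTestCase:
    name: str
    call: Callable[[], object]  # wraps one API call on specific test data
    expected: object            # the recorded expected result


def run_suite(cases):
    """Run every test case and collect discrepancies for the report."""
    report = []
    for case in cases:
        try:
            actual = case.call()
        except Exception as exc:  # a failing call is reported, not raised
            actual = f"error: {type(exc).__name__}"
        if actual != case.expected:
            report.append({"test": case.name, "expected": case.expected, "actual": actual})
    return report


# Stand-in for the test database; a real framework would call the API here.
TEST_DATA = {1001: {"status": "ordered"}, 1002: {"status": "delivered"}}


def get_order_status(order_id):
    return TEST_DATA[order_id]["status"]


cases = [
    ApiTestCase("known order", lambda: get_order_status(1001), "ordered"),
    ApiTestCase("delivered order", lambda: get_order_status(1002), "shipped"),
    ApiTestCase("unknown order", lambda: get_order_status(9999), "not found"),
]

report = run_suite(cases)
for entry in report:
    print(entry)
```

In this sketch the first test case passes, while the other two end up in the report: one because the output differs from the expected result, the other because the simulated call fails.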

Does this mean that the manual effort has been measurably reduced? Are there any other advantages?

Jenne: Yes, that's right, the manual effort has been measurably reduced. There is also another advantage: the framework runs automatically on all test environments every day and especially after each deployment. If a test case fails, a report is generated so that it is immediately clear if errors occurred during deployment or during the normal run and why. This saves a lot of time in the search for bugs and ensures continuous quality.

For each test completion, there is a set of automated test cases that were created during the sprint. This set is integrated into the entire framework. This means that it is no longer necessary to test manually - everything can be tested automatically at the push of a button. It is possible to run a single test case, the entire framework or just parts of it.
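Running a single test case, a part of the framework or everything could look roughly like this. The registry, the `test_case` decorator, the tags and the test names are assumptions for illustration; the interview does not describe how selection is implemented in the actual framework.

```python
REGISTRY = {}  # test name -> (tags, function)


def test_case(*tags):
    """Register a function as a test case under the given tags."""
    def decorator(func):
        REGISTRY[func.__name__] = (set(tags), func)
        return func
    return decorator


# Placeholder test cases; each returns True when it passes.
@test_case("orders", "sprint_12")
def order_api_returns_record():
    return True


@test_case("orders")
def order_date_has_date_type():
    return True


@test_case("customers")
def customer_history_is_complete():
    return True


def select(name=None, tag=None):
    """Pick a single test by name, a subset by tag, or the whole set."""
    if name is not None:
        return [name] if name in REGISTRY else []
    if tag is not None:
        return [n for n, (tags, _) in REGISTRY.items() if tag in tags]
    return list(REGISTRY)


def run(names):
    """Execute the selected test cases and return their results by name."""
    return {n: REGISTRY[n][1]() for n in names}


print(run(select(name="order_api_returns_record")))  # a single test case
print(run(select(tag="orders")))                     # only a part
print(run(select()))                                 # the entire set
```

Tagging each sprint's test cases, as with the hypothetical `sprint_12` tag above, is one way to keep the set created during a sprint addressable after it has been merged into the overall framework.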

Thank you very much for the interesting interview! Do you have any final words for us?

Jenne: In conclusion, I can say that although the implementation was time-consuming at the beginning, the automation in testing has led to considerable time savings for the testers and an increase in quality.